Be Warned - Vanity Metrics: Road Map and Vanity Metrics

A few guidelines for Metrics

The metric is used by the team – Agile metrics should not be imposed or measured by management; they should be used voluntarily by agile teams to learn and improve.

The metric is surrounded by conversation – Metrics should not just be numbers; they should be the starting point of a conversation about the process and the roadblocks affecting the team.

The metric is part of a specific experiment – Metrics should be used to answer a specific question about agile processes, not just measured for the sake of measurement (vanity metrics).

The metric is used in tandem with other metrics – Even a great metric, if used alone, might lead to tunnel vision, and incentivise teams to maximize that metric at the expense of all else. Using several metrics together provides a balanced picture of agile activity.

The metric is easy to calculate and understand – Metrics that are overly complex or not fully understood, even if they provide good insights about a team’s work, are not useful in guiding day-to-day activities.

Here are a few ideas for you to consider

North Star Metric

Product success relies on measuring meaningful metrics – a north star. To define the north star – look at how your product delivers value to customers.

For most organisations, the north star metric is usually linked to customer engagement with your product.

A north star metric is:

A single metric that best captures the core value that your product delivers to customers.

A source of clarity and alignment on what the product team needs to optimize for and what can be traded off.

Most importantly, it holds the product accountable to an outcome. Examples include

Airbnb: Nights Booked.

Facebook: Daily Active Users.

Quora: Number of questions a user answers.

WhatsApp: Number of messages a user sends.

Escaped Defects

This is the number of bugs discovered after a build or release enters the production environment. The goal would be to get this as close as possible to zero.

Measuring escaped defects can provide a crude measure of deployed software quality.
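As a rough illustration of how this count could be tracked, the sketch below groups defects by release and counts only those found in production. The `Defect` record and its field names are assumptions made for this example, not part of any particular bug tracker.

```python
from dataclasses import dataclass


@dataclass
class Defect:
    # Hypothetical record; a real tracker will expose different fields.
    id: str
    release: str
    found_in_production: bool  # True if discovered after the release went live


def escaped_defects_per_release(defects):
    """Count defects found in production, grouped by release."""
    counts = {}
    for defect in defects:
        if defect.found_in_production:
            counts[defect.release] = counts.get(defect.release, 0) + 1
    return counts


defects = [
    Defect("BUG-1", "1.0", True),
    Defect("BUG-2", "1.0", False),  # caught before release, so not escaped
    Defect("BUG-3", "1.1", True),
    Defect("BUG-4", "1.1", True),
]
print(escaped_defects_per_release(defects))
```

Tracking the count per release, rather than as one running total, makes it easier to see whether the trend is moving towards zero.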

If the number of escaped defects is high, it can generally come down to a few reasons including

The team is not working at a sustainable pace - they are rushing and hacking to try to get stuff done for some arbitrary deadline which results in reduced quality

Not addressing technical debt - building new functionality on top of unstable or poorly implemented code and not addressing underlying issues will result in more defects

Lack of investment in automated testing or a test-driven development approach - if a team doesn't invest in building unit tests, end-to-end tests and so on, it will become difficult to handle regression testing when new code is added to the code base.

Support

Support Requests

Users might end up with requests which require product development teams to get involved e.g. importing large amounts of data.

Support requests require effort from teams, so it’s important to measure this. If the team is constantly handling one sort of request, it is probably best to work out a way for end-users to do this themselves in the future, freeing up the development team to work on something else.

If a support request comes into the development team because of an escaped defect, then it should be classed as an escaped defect.
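One possible way to apply these rules is sketched below: requests caused by a defect are counted as escaped defects, and the rest are tallied by type so the most frequent request stands out as a candidate for self-service. The dictionary keys `type` and `caused_by_defect` are assumptions for this example.

```python
from collections import Counter


def summarise_support(requests):
    """Return (escaped defect count, support requests by type, most common first)."""
    escaped = sum(1 for r in requests if r["caused_by_defect"])
    by_type = Counter(r["type"] for r in requests if not r["caused_by_defect"])
    return escaped, by_type.most_common()


# Hypothetical requests raised with the development team.
requests = [
    {"type": "data import", "caused_by_defect": False},
    {"type": "data import", "caused_by_defect": False},
    {"type": "password reset", "caused_by_defect": False},
    {"type": "report crash", "caused_by_defect": True},
]
escaped, by_type = summarise_support(requests)
print(escaped)
print(by_type)
```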

Support Data

The other part of support is data from a help desk system. This provides quantified data to inform prioritisation decisions about what to work on next. It can also highlight areas of the system that may need work, which could include

Fixing of bugs

Training for a particular feature

Reviewing the UX design

Marketing support to aid adoption of a feature that no one is using

Lead and Cycle Time

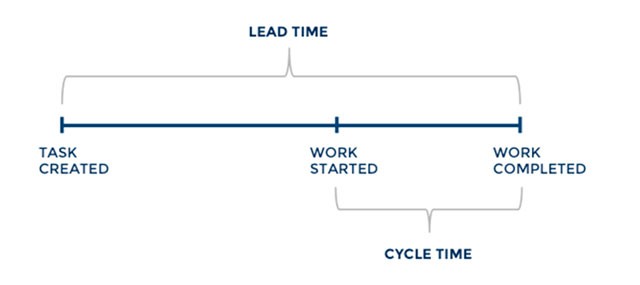

Lead time measures the total time from the moment a story enters the product backlog until it is completed and released to end-users. Some teams might even measure it from when something is added to the roadmap.

It is designed to measure the total time for a requirement to be realised and start earning value. It measures the entire system from end to end, and reducing the lead time should mean that the pipeline for creating and delivering new ideas is becoming more efficient.

Cycle Time

This is a section of the lead time. Teams normally measure it from when work on an item starts to when it is delivered. If this is very long, or items in sprints are never delivered, then you are not adding value for end-users.
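The difference between the two measures can be shown with a minimal sketch. It assumes each work item records three dates: when it entered the backlog, when work started, and when it was released. The key names are made up for this example.

```python
from datetime import date


def lead_time_days(item):
    """Days from entering the backlog to release to end-users."""
    return (item["released"] - item["added_to_backlog"]).days


def cycle_time_days(item):
    """Days from work starting to release to end-users."""
    return (item["released"] - item["started"]).days


# Hypothetical story with illustrative dates.
story = {
    "added_to_backlog": date(2024, 1, 2),
    "started": date(2024, 1, 15),
    "released": date(2024, 1, 26),
}
print(lead_time_days(story))   # 24
print(cycle_time_days(story))  # 11
```

Here the story waited 13 days in the backlog before anyone picked it up, which is the part of the lead time that cycle time does not show.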

Team Morale

From the agile principles, organisations should build projects around motivated individuals. Give them the environment and support they need, and trust them to get the job done.

One way to measure if a team is motivated is to check the team's morale.

The psychologist Verwijs suggests measuring team morale by asking team members to rate the following statements on a scale from 1 to 5 (or from 1 to 7, as it is more nuanced):

I feel fit and strong in my team

I am proud of the work that I do for my team

I am enthusiastic about the work that I do for my team

I find the work that I do for my team of meaning and purpose
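The text does not say how the ratings should be combined, so the sketch below makes an assumption: average each member's four ratings, then average across the team.

```python
from statistics import mean


def team_morale(responses):
    """Average each member's ratings, then average those across the team."""
    per_member = [mean(ratings) for ratings in responses]
    return round(mean(per_member), 2)


# Hypothetical answers on a 1 to 5 scale, one row per team member,
# one column per statement in the order listed above.
responses = [
    [4, 5, 4, 4],
    [3, 4, 4, 3],
    [5, 5, 4, 5],
]
print(team_morale(responses))  # 4.17
```

A single number hides disagreement within the team, so it is worth looking at the per-member averages as well before drawing conclusions.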

Turnover

Related to team morale, staff turnover provides a good indication of whether the team is happy, motivated and learning. People are more motivated when given autonomy and when engaged at work. Purpose is becoming more important for people than profit.

Employee turnover is calculated by dividing separations by the average number of employees: employee turnover = number of separations / average number of employees.

So if you lost 3 people in the past year out of a staff of 46, employee turnover = 3 separations / 46 employees = .0652 = 6.52% annual turnover.

If you had three staffers quit last month, and your total team is 46 employees, employee turnover = 3 separations in a month / 46 employees = .0652 = 6.52% monthly turnover.
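The same arithmetic as a small function, shown only as a sketch of the formula above. The function name is made up for this example.

```python
def employee_turnover(separations, average_employees):
    """Turnover for a period = separations / average number of employees."""
    if average_employees <= 0:
        raise ValueError("average_employees must be positive")
    return separations / average_employees


rate = employee_turnover(3, 46)
print(f"{rate:.2%}")  # 6.52%
```

The period is whatever window the separations were counted over, so the same 6.52% means something very different as a monthly figure than as an annual one.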

Number of Experiments

In order for teams and products to improve, experiments need to happen.

Experiments also provide the team with variation and a break from the monotony of daily work.

The agile principles state: “At regular intervals, the team reflects on how to become more effective, then tunes and adjusts its behaviour accordingly.”

So what are examples of experiments?

Trying a new tool for collaboration, or to improve the way the team works or engages with users.

Working with customers or end-users to prototype

Trying a new way of working

Looking at some new technology - have a hackathon

Customer Satisfaction or Happiness

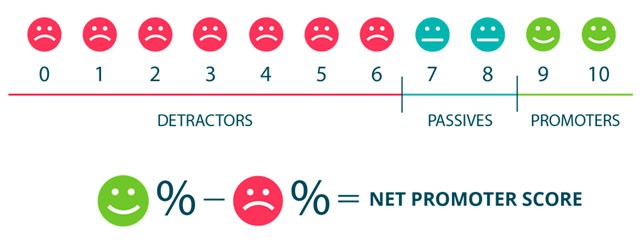

Many teams will use the Net Promoter Score (NPS). However, this can be considered a vanity metric.

Net Promoter Score (NPS), calculated for a software release, measures whether users would recommend the software to others, do nothing, or recommend against using it. It is an important gauge of customer satisfaction.

If customers are recommending this new release to others, that is a clear indication of success. If not, you can use this as a warning metric and use other data to understand what’s wrong.
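The text above does not give the calculation, so the sketch below uses the usual NPS convention, which is an addition here: users rate how likely they are to recommend the product from 0 to 10, ratings of 9 or 10 count as promoters, 0 to 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors.

```python
def net_promoter_score(ratings):
    """NPS = % promoters (9-10) minus % detractors (0-6), from -100 to 100."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey responses for one release.
ratings = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(ratings))  # 20.0
```

Ratings of 7 and 8 (the users who would "do nothing") count towards the total but towards neither group, which is why a release can score close to zero even when few users are unhappy.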

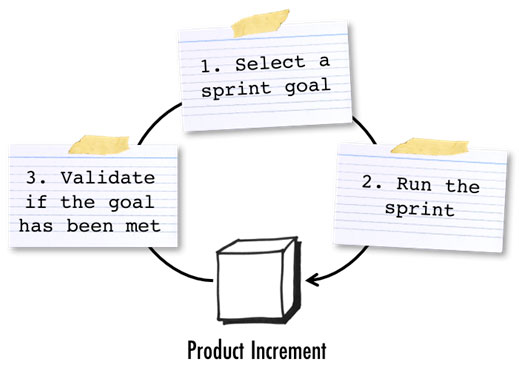

Sprint Goal

Should teams possibly look at how many times they hit the sprint goal or is this another vanity metric?

The sprint goal helps teams focus during the sprint and answers three questions:

Why are we carrying out the sprint?

How do we reach the sprint goal?

What metric tells us the goal has been met?

So why measure it? You will end up with a qualitative assessment of a scrum team’s work. Not just how many story points are completed, but how frequently the objectives of the business are met.

Maybe just a log of "smashed it" or "didn't quite smash it"?
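Such a log could be as simple as one true or false entry per sprint. The sketch below, with made-up sprint names, turns it into a hit rate.

```python
def sprint_goal_hit_rate(log):
    """Fraction of logged sprints in which the sprint goal was met."""
    if not log:
        raise ValueError("the log is empty")
    return sum(log.values()) / len(log)


# Hypothetical log: True means the sprint goal was met.
log = {
    "Sprint 1": True,
    "Sprint 2": False,
    "Sprint 3": True,
    "Sprint 4": True,
}
print(f"{sprint_goal_hit_rate(log):.0%}")  # 75%
```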

Team Health Check

Healthy teams will be able to work better together.

This is not about medical checks for team members. It's more about the environment they are working in and how they think they are working together.

This breaks down into multiple areas of the team, e.g. culture, quality, learning and more, and provides an outcome that shows which areas are worse than others and where to focus.

One suggested approach is to give all the individuals cards to allow them to rate different areas of the team.

Each team member can then decide how they feel on each topic. Topics could include

The outcome of the exercise will provide a grid, something like the below. This then gives you a clear visualisation to decide what to prioritise and focus on and try and improve until the next team health check is done again.
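As a rough sketch of how the card ratings might be turned into something to prioritise from, the code below averages each topic across the team and lists the weakest areas first. The 1 to 5 scale, the member names and the data layout are all assumptions for this example. The topics are the ones mentioned earlier (culture, quality, learning).

```python
from statistics import mean


def health_grid(ratings):
    """Average each topic across members and return (topic, average), worst first."""
    by_topic = {}
    for member_scores in ratings.values():
        for topic, score in member_scores.items():
            by_topic.setdefault(topic, []).append(score)
    averages = {topic: mean(scores) for topic, scores in by_topic.items()}
    return sorted(averages.items(), key=lambda pair: pair[1])


# Hypothetical ratings on a 1 to 5 scale.
ratings = {
    "Ana": {"culture": 4, "quality": 2, "learning": 3},
    "Ben": {"culture": 5, "quality": 3, "learning": 3},
    "Caz": {"culture": 4, "quality": 2, "learning": 4},
}
for topic, average in health_grid(ratings):
    print(f"{topic}: {average:.1f}")
```

With this data, quality comes out lowest, so it would be the first area to discuss before the next health check.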